This is a follow-up to the work that began with my migration from Arch Linux to the Artix Linux distribution. Since the switch to an OpenRC-based distribution was successful, I decided to move on to hardware changes soon after.

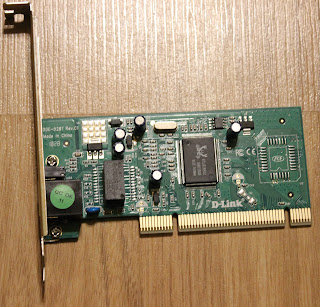

Once the cabling was finished, I spent quite a long time attaching the daughterboard itself properly. As in the past, the boot process was very unstable, but after multiple attempts I reached reasonable stability, with most boot attempts succeeding. Unfortunately, I noticed one regression compared to the Delock controller: attempting to read the Kingston SSD temperature with the hddtemp utility caused it to fail, and a reboot was required.

Motivation

The major goal was to reuse the replaced 3TB hard drives in a RAID0 setup as additional backup storage, whose combined capacity would match the newly acquired hard drives. I faced some challenges during implementation:

- The Akiwa GHB-B05 Mini-ITX case can physically fit only two 3.5'' hard drives, and both bays were already occupied by the two main hard drives.

- There are only two SATA connectors available on the Jetway JNF-76 motherboard.

- I wanted to keep using network aggregation, so I needed two Ethernet ports. The motherboard has only one, though.

- If possible, I wanted to avoid additional expenses or keep them to a minimum.

The plan

To get additional SATA ports I was forced to replace my AD3INLANG-LF LAN expansion daughterboard with the ADPE4S-PB SATA controller (it is based on the Marvell 88SE6145-TFE1 controller). I was a bit reluctant about this decision, since I had faced constant boot issues with it in the past. It also needs special configuration to work on Linux. Moreover, the proprietary Jetway daughterboard connector makes it challenging to seat the board firmly. Finally, losing the LAN ports meant that I couldn't use network aggregation without an additional PCI card. However, the only alternative was to buy a new PCI SATA controller with at least three SATA connectors; these are getting harder to find, and the prices are not attractive in my region. Additionally, it's hard to predict whether such a decision would bring new problems along the way. So, I decided to go with the daughterboard exchange.

To keep network aggregation possible I needed to make one purchase. Since the Intel PRO/1000 GT PCI network card from my stockpile is not compatible with the ADPE4S-PB daughterboard due to a firmware clash, I needed to buy another card without network boot support. For that purpose I acquired the D-Link DGE-528T (Rev. C1) network card, which is based on the Realtek RTL8169SC NIC. It cost me around 7.5€, in keeping with the goal of minimal spending.

Additionally, I reused a back plate panel with two SATA-to-eSATA connectors and one 4-pin Molex power connector, plus one SATA cable and one eSATA-to-SATA cable. I already had all of them in my pile of parts.

Assembly

Initially, I made sure that I had enough power cables and that I could extend them far enough outside the case, so that the hard drives would stand vertically, stable and still, with enough slack that the cables were not stretched. Fortunately, I still had one free Molex connector, which I used for the back panel's power connector. This made it extremely easy to connect one of the two hard drives, since both the power connector and the eSATA connector were routed outside the case. However, the second drive couldn't be connected in the same manner, because it needed one more power connector. Fortunately, the PSU had four SATA power connectors, one of which was long enough to go outside the case and reach the hard drive. So in the end, all four of the PSU's SATA power connectors were in use (1xSSD, 3xHDD), plus one Molex connector for the back panel's power cable extension (1xHDD). The back panel also made it possible to attach one of the hard drives using the eSATA-to-SATA cable. Since I had only one such cable, I used a long SATA cable to attach the second hard drive from inside the case.

Figure: Externally placed hard drives cabling

Figure: Cabling at the back plane

The easiest part of the assembly was attaching the D-Link network card to the PCI slot and cleaning up the cables. As expected, the DGE-528T didn't clash with the daughterboard, and it is well supported by Linux. The integrated SATA controller also needed to be switched to IDE mode to avoid a clash.

Figure: Hard drives in external casing

RAID0 Configuration

The main goal was to set up the two 3TB hard drives in RAID0 mode, so that they would be available as one virtual 6TB drive. This volume would match my newly bought 6TB drives and act as yet another backup storage. To achieve this, I first tried to use the Marvell firmware, but unfortunately it froze on every RAID creation attempt. I couldn't figure out the reason, but it didn't seem to be a capacity limit, since the 6TB hard drives were detected by the controller without issues. Some drive incompatibility looked like a more plausible explanation, but I had no means to test and verify my assumptions. As a consequence, I decided to try setting up an LVM-managed RAID instead. Using two sources, the Gentoo Linux wiki and the Red Hat Linux documentation, I successfully configured my LVM RAID0 with these commands:

- Install and start LVM service:

- sudo pacman -S lvm2 lvm2-openrc

- sudo rc-update add lvm boot

- sudo /etc/init.d/lvm start

- Create LVM physical volumes, a volume group, and the RAID0 logical volume:

- sudo pvcreate /dev/sda1 /dev/sdc

- sudo vgcreate nasbackupvg /dev/sda1 /dev/sdc

- sudo vgs #to check

- sudo lvcreate --type raid0 -l 100%FREE -n nasbackuplv nasbackupvg

- Format and mount volume using XFS filesystem:

- sudo mkfs.xfs /dev/nasbackupvg/nasbackuplv

- sudo mkdir /mnt/raid

- sudo mount -t xfs /dev/nasbackupvg/nasbackuplv /mnt/raid

- Edit /etc/fstab by adding the line (in order to mount volume on boot):

- /dev/nasbackupvg/nasbackuplv /mnt/raid xfs defaults,relatime 0 4
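
The volume creation steps above can be collected into one small script. This is only a sketch under a few assumptions: the `run` helper and the `DRY_RUN` variable are hypothetical names introduced here, the device names are the ones from this particular machine, and `mkdir -p` is used instead of plain `mkdir` so that a rerun does not fail. By default it only prints the commands, so it is safe to try before touching any disks. Installing and starting the LVM service and editing /etc/fstab are left as manual steps.

```shell
#!/bin/sh
# Dry-run by default: commands are only printed unless DRY_RUN=0 is set.
# With DRY_RUN=0 the script has to be run as root.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

setup_raid0() {
    vg=nasbackupvg
    lv=nasbackuplv
    # Each step runs only if the previous one succeeded.
    run pvcreate /dev/sda1 /dev/sdc &&
    run vgcreate "$vg" /dev/sda1 /dev/sdc &&
    run lvcreate --type raid0 -l 100%FREE -n "$lv" "$vg" &&
    run mkfs.xfs "/dev/$vg/$lv" &&
    run mkdir -p /mnt/raid &&
    run mount -t xfs "/dev/$vg/$lv" /mnt/raid
}

setup_raid0
```

Chaining the steps with `&&` stops the sequence at the first failure, which matters here because formatting a half-created volume would be pointless at best.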

I also measured the performance of all attached drives:

- sudo hdparm -tT /dev/sdX and dd if=/dev/zero of=output.img bs=8k count=256k:

- Seagate 3TB (attached to Marvell controller)

- Timing cached reads: 1000 MB in 2.00 seconds = 500.18 MB/sec

- Timing buffered disk reads: 320 MB in 3.00 seconds = 106.52 MB/sec

- 2147483648 bytes (2.1 GB, 2.0 GiB) copied, 39.3878 s, 54.5 MB/s (for RAID setup Toshiba+Seagate)

- Toshiba 3TB (attached to Marvell controller)

- Timing cached reads: 1002 MB in 2.00 seconds = 500.75 MB/sec

- Timing buffered disk reads: 326 MB in 3.01 seconds = 108.44 MB/sec

- 2147483648 bytes (2.1 GB, 2.0 GiB) copied, 39.3878 s, 54.5 MB/s (for RAID setup Toshiba+Seagate)

- Toshiba 6TB (both attached to VX800 controller)

- Timing cached reads: 1012 MB in 2.00 seconds = 505.75 MB/sec

- Timing buffered disk reads: 528 MB in 3.00 seconds = 175.78 MB/sec

- first HDD: 2147483648 bytes (2.1 GB, 2.0 GiB) copied, 18.6864 s, 115 MB/s

- second HDD: 2147483648 bytes (2.1 GB, 2.0 GiB) copied, 15.9705 s, 134 MB/s

- Kingston SSD (attached to Marvell controller)

- Timing cached reads: 982 MB in 2.00 seconds = 491.40 MB/sec

- Timing buffered disk reads: 324 MB in 3.01 seconds = 107.47 MB/sec

- 2147483648 bytes (2.1 GB, 2.0 GiB) copied, 36.0755 s, 59.5 MB/s
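
For reference, the dd figures above can be reproduced by hand from the block size, the block count, and the elapsed time. The helper below is a small sketch (the function name `throughput_mbs` is made up for this example) and assumes decimal megabytes, 1 MB = 10^6 bytes, which is the unit dd reports.

```shell
# Convert a dd byte count and elapsed seconds into decimal MB/s (1 MB = 10^6 bytes).
throughput_mbs() {
    awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%.1f\n", bytes / secs / 1000000 }'
}

# bs=8k count=256k writes 8192 * 262144 bytes in total.
total_bytes=$((8192 * 262144))
echo "$total_bytes"

# RAID0 volume (Toshiba+Seagate): prints 54.5, matching the figure dd reported.
throughput_mbs "$total_bytes" 39.3878
```

The same call with the elapsed time of any other drive gives its write throughput, which makes it easier to compare the runs side by side.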

Network aggregation

Network aggregation also had to be reconfigured as a result of the hardware changes. Instead of the AD3INLANG-LF daughterboard with its three Intel 82541PI network controllers, I used the integrated Realtek 8111C controller and the PCI-attached D-Link DGE-528T network card with its Realtek RTL8169SC NIC. The integrated one was named ens1 and the D-Link one enp4s3, so the configuration was the following:

- nmcli con delete bond0 # deleting previous interface

- nmcli con add type bond ifname bond0 bond.options "mode=802.3ad"

- nmcli con add type ethernet ifname ens1 master bond0

- nmcli con add type ethernet ifname enp4s3 master bond0

It worked as expected, but the transfer speed dropped to 40-43 MB/s, compared to the >50 MB/s that the Intel NICs usually achieved. The regression was expected, having been observed in the past, and is acceptable for my current usage scenarios.
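
One way to confirm that both interfaces actually joined the bond is to read the bonding driver's sysfs files. The function below is a minimal sketch: `bond_summary` is a hypothetical name, and the optional second argument (the sysfs root) exists only so the function can be pointed at a test directory. It assumes the standard `bonding/mode` and `bonding/slaves` files that the Linux bonding driver exposes under /sys/class/net.

```shell
# Print the mode and member interfaces of a bond.
# $1: bond name (default bond0), $2: sysfs root (default /sys/class/net).
bond_summary() {
    bond="${1:-bond0}"
    root="${2:-/sys/class/net}"
    dir="$root/$bond/bonding"
    if [ ! -d "$dir" ]; then
        echo "$bond: no bonding directory under $root" >&2
        return 1
    fi
    echo "mode: $(cat "$dir/mode")"
    echo "slaves: $(cat "$dir/slaves")"
}

# Usage on the NAS itself:
#   bond_summary bond0
```

On this setup the slaves line should list both ens1 and enp4s3; if one of them is missing, that connection did not attach to bond0.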

Figure: D-Link DGE-528T

Conclusion

In general, the plan went quite successfully. I managed to reach all the goals with minimal spending and effort. There were a few issues, such as the multiple attempts needed to attach the daughterboard properly and the Marvell firmware RAID creation failure, but I managed to work around them one way or another. Some performance metrics are not stellar, but they are sufficient for home NAS usage at present. That said, I believe this is the last major hardware update around this motherboard, since I am reaching the limits of this decade-old platform. The next step would require more modern solutions, but until then the current system will serve me well.

Figure: Arrangement of PC and hard drives